Hello. This is codingwalks.

In this article, I will continue writing from the previous article.

OpenCV + Python Contour Detection and Labeling - 1


6. Practical Application of Contour Detection: Object Tracking

Contour detection goes beyond simple boundary extraction and is used in many practical applications. A representative one is object tracking: detecting an object by its contour and following its trajectory as it moves is a common approach in vision systems.

Basic Concept of Object Tracking

With contour detection, you can detect an object of interest in an image and record its location and movement by tracking it frame by frame. This can be used to analyze the movement of cars, people, and other objects.

Basic Flow

- Contour Detection: Use cv2.findContours() or a similar function to extract the boundary of the object in the area of interest.

- Location Tracking Using Moments: Compute the centroid of the object from its image moments and follow that point frame by frame.

- Real-time Object Tracking: Detect the object continuously to record its trajectory or predict its direction of movement.

Contour-based object tracking example

Here is a simple example that detects objects from a webcam in real time and tracks their contours.

    import cv2
    import numpy as np

    # Open the webcam (index 1 selects the second camera; use 0 for the default device)
    cap = cv2.VideoCapture(1)

    while True:
        # Read a frame; stop when no frame is available
        ret, frame = cap.read()
        if not ret:
            break

        # Convert to grayscale and apply blur
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        blurred = cv2.GaussianBlur(gray, (5, 5), 0)

        # Binarization (Otsu chooses the threshold automatically)
        _, thresh = cv2.threshold(blurred, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

        # Find contours
        contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

        # Draw contours
        for contour in contours:
            if cv2.contourArea(contour) > 500:  # Ignore objects that are too small
                cv2.drawContours(frame, [contour], -1, (0, 255, 0), 2)

                # Calculate the centroid from the contour moments
                M = cv2.moments(contour)
                if M["m00"] != 0:
                    cx = int(M["m10"] / M["m00"])
                    cy = int(M["m01"] / M["m00"])
                    cv2.circle(frame, (cx, cy), 5, (0, 0, 255), -1)

        # Show the intermediate results and the final frame in a 2x2 grid at half size
        gray_3channel = cv2.resize(cv2.cvtColor(gray, cv2.COLOR_GRAY2BGR), (0, 0), None, .5, .5)
        blurred_3channel = cv2.resize(cv2.cvtColor(blurred, cv2.COLOR_GRAY2BGR), (0, 0), None, .5, .5)
        thresh_3channel = cv2.resize(cv2.cvtColor(thresh, cv2.COLOR_GRAY2BGR), (0, 0), None, .5, .5)
        frame_resize = cv2.resize(frame, (0, 0), None, .5, .5)
        result = np.vstack((np.hstack((gray_3channel, blurred_3channel)),
                            np.hstack((thresh_3channel, frame_resize))))
        cv2.imshow("Object Tracking", result)

        # Press 'q' to quit
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

This code receives webcam frames in real time, traces the contour of each object, and marks its center on the screen. For every frame it detects the contours, calculates the centroid of each sufficiently large contour from its moments, and draws it. This method is mainly useful when the object keeps a fairly constant shape while it moves.

Combination with various object tracking techniques

Contour detection is only one method of object tracking. You can build a more sophisticated tracking system by combining it with other techniques.

- Kalman Filter: Predicts the next position and direction of movement of an object from the contour centroids measured so far.

- Optical Flow: Estimates the direction and speed of motion between frames, and can be used together with contour detection for more accurate tracking.

- Background Subtraction: When an object moves in front of a fixed background, you can extract only the moving object by computing the difference from the background and then detect its contour.

Applications

- Pedestrian Tracking System: Tracks the position of pedestrians by detecting their contours in real time, and can separate individual people in a crowd.

- Smart car lane and object detection: Tracks lanes and objects around the vehicle using contour detection, which can feed collision avoidance systems.

- Sports analysis: Tracks the movement of players during a game and analyzes their trajectories to help establish game strategies.

Limitations of object tracking

Object tracking using contours is very useful, but it has the following limitations.

- Sensitive to shape changes: If the object deforms or overlaps with another object, its contour is difficult to track.

- Sensitive to lighting changes: If the lighting changes, binarization may fail and the contour may not be detected.

- Complex backgrounds: If the background is complex, background structures may be confused with the contour of the object, which makes tracking difficult.

To overcome these limitations, you can consider combining contour detection with shape matching techniques or machine learning models.

7. Object Labeling after Contour Detection

After the boundaries of objects have been found through contour detection, each object needs a unique label. Labeling plays an important role in tasks such as object classification, tracking, and analysis, because it lets each object be identified and processed individually.

7.1. Need for Labeling

Once contour detection is complete, the same image may contain multiple objects. Labeling distinguishes them by assigning a unique number (label) to each, which enables object tracking and statistical analysis in later steps. For example, in an image with several coins, labeling lets you track and measure each coin individually.

7.2. Labeling in OpenCV

In OpenCV, the connectedComponents function is used to identify connected pixel areas in an image and assign labels to each area. This function takes a binarized image as input, identifies connected components, and assigns a unique label to each area.

How to use the cv2.connectedComponents function

    retval, labels = cv2.connectedComponents(binary_image)

- binary_image: Binarized (8-bit, single-channel) input image, for example the result of thresholding.

- retval: The total number of labels, including the background (label 0). The number of objects is therefore retval - 1.

- labels: The resulting label image, the same size as the input. Every pixel holds the number of the component it belongs to, so each object is identified by a unique number.

Example Code: Object Identification Using Labeling

    import cv2
    import numpy as np

    # Read the image and binarize it
    image = cv2.imread('resources/contour.jpg')
    if image is None:
        raise FileNotFoundError('resources/contour.jpg could not be read')
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    _, binary = cv2.threshold(gray, 128, 255, cv2.THRESH_BINARY)

    # Labeling (label 0 is the background)
    num_labels, labels_im = cv2.connectedComponents(binary)

    # Colorize labels: map each label to a hue (OpenCV hue range is 0-179)
    # max(..., 1) avoids a division by zero when no object was found
    label_hue = np.uint8(179 * labels_im / max(np.max(labels_im), 1))
    blank_ch = 255 * np.ones_like(label_hue)
    labeled_img = cv2.merge([label_hue, blank_ch, blank_ch])

    # Apply colormap (HSV -> BGR conversion)
    labeled_img = cv2.cvtColor(labeled_img, cv2.COLOR_HSV2BGR)

    # Set the background (label 0) to white
    labeled_img[labels_im == 0] = 255

    # Show the original and the labeled image side by side
    result = np.hstack((image, labeled_img))
    cv2.imshow('Result Image', result)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

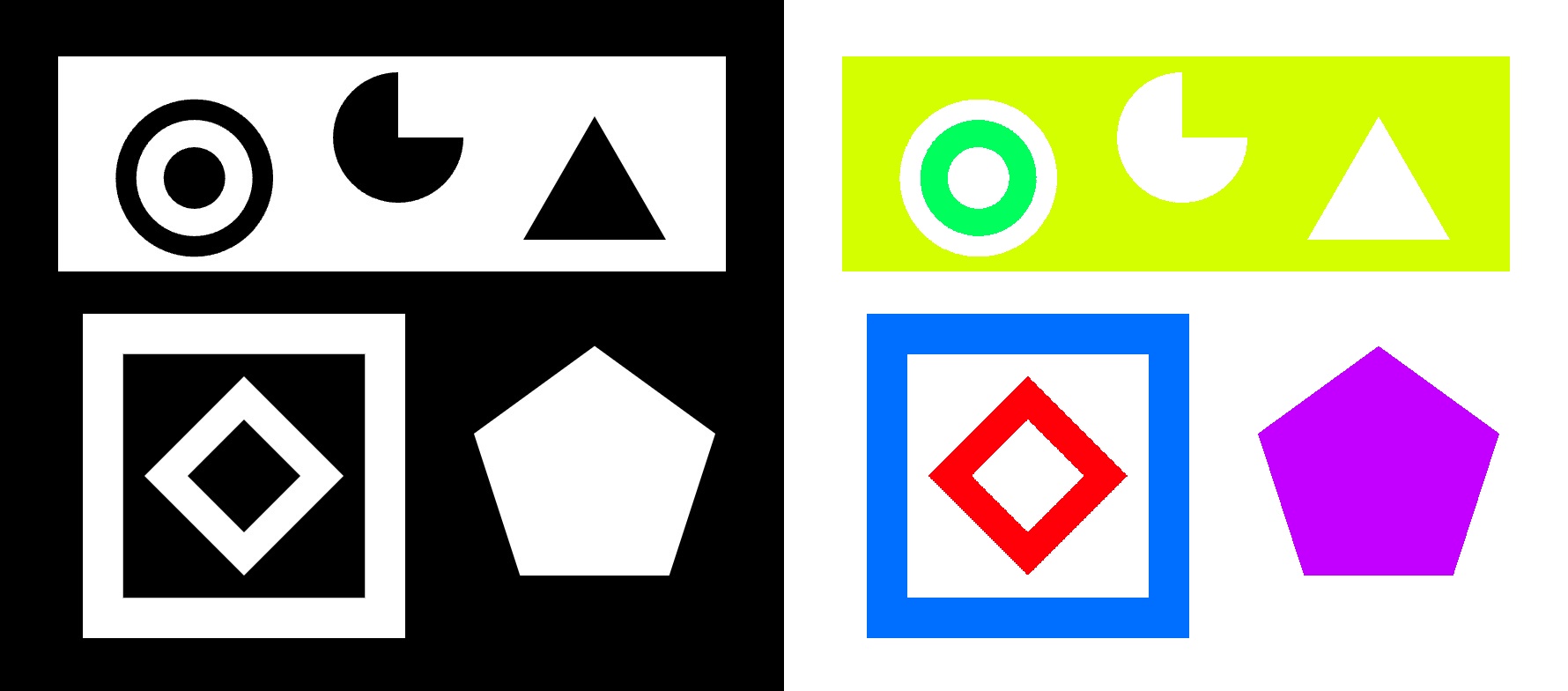

This code is an example of assigning a unique label to each object and then visually displaying the label. It gives a unique color to each connected component, making it easy to identify the object in the image.

7.3. Extracting Information after Labeling

After assigning labels to objects, you can extract various information about each object. For example, you can calculate the area, perimeter, and centroid of the object and use them for object analysis. The area and centroid can be obtained with the cv2.moments function of OpenCV.

Extracting Object Information with cv2.moments

The cv2.moments() function extracts geometric characteristics of an object by calculating image moments from a given contour or binarized image. The main moment values are as follows:

- m00: Area of the object

- m10, m01: First-order moments, used to calculate the centroid (cx = m10 / m00, cy = m01 / m00)

- m20, m02: Second-order moments, used to analyze the shape of the object, such as how far it spreads along each axis

Example Code: Extracting Moments after Labeling

    import cv2

    # Read the image and binarize it
    image = cv2.imread('resources/contour.jpg')
    if image is None:
        raise FileNotFoundError('resources/contour.jpg could not be read')
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    _, binary = cv2.threshold(gray, 128, 255, cv2.THRESH_BINARY)

    # Detect contours in the binarized image
    contours, _ = cv2.findContours(binary, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

    for contour in contours:
        # Calculate moments
        M = cv2.moments(contour)

        # Calculate the centroid (contours with zero area are skipped)
        if M["m00"] != 0:
            cx = int(M["m10"] / M["m00"])
            cy = int(M["m01"] / M["m00"])

            # Draw the contour and mark its centroid
            cv2.drawContours(image, [contour], -1, (0, 255, 0), 2)
            cv2.circle(image, (cx, cy), 5, (0, 0, 255), -1)

    cv2.imshow("Labeled Objects with Moments", image)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

This code is an example of detecting the outline of each object, calculating the center of gravity of the object, and displaying it visually. Combining outlines and labeling allows for a detailed geometric analysis of each object.

7.4. Labeling and Object Analysis

Labeling is not just about identifying objects; it also plays an important role in analyzing specific physical information about the object. The following analysis tasks are possible:

- Calculating the area and perimeter of an object: Using the cv2.contourArea() and cv2.arcLength() functions, you can analyze the size of each object.

- Shape Analysis: Using cv2.approxPolyDP(), you can approximate the outline of an object with a polygon and analyze the shape of the object. This is useful for identifying morphological features such as whether the object is circular or rectangular.

- Tracking the center of mass: Using moments, you can track the center of mass of each object. This is very useful for analyzing physical properties or tracking objects.

Labeling is the first step in distinguishing multiple objects in an image, and is an essential procedure for extracting various information about each object.

8. Practical Applications of Contour Detection and Labeling

Contour detection and labeling are used in practice across many industries and research fields. Combining the two makes it easy to obtain the shape and location of objects in an image, which plays an important role in automated systems. Below are some practical cases where contour detection and labeling are used.

8.1. Defect Detection in Manufacturing

Contour detection is often used in manufacturing to detect product defects. When looking for geometric deformations or torn parts on the surface of a product, contours can be analyzed to compare normal and defective products. Labeling separates multiple defects so that each one can be analyzed on its own.

Application Method

- Automated Inspection System: Extracts the outlines of products passing through a conveyor belt in real time, and automatically determines whether there are any defects by analyzing the shape and size of each product.

- Statistical analysis after labeling: By labeling the parts where defects occur, you can identify the frequency and location of defects and diagnose which parts are problematic in the manufacturing process.

8.2. Object detection in traffic systems

Outline detection and labeling are also widely used in traffic systems. In an intersection monitoring system, the outline of a vehicle can be detected through a camera, and the movement of the vehicle can be tracked through this. Labeling is used to distinguish individual vehicles and analyze the speed or trajectory of the vehicle.

Application methods

- Lane and vehicle recognition: When recognizing lanes on the road or tracking vehicles, the boundary of the lane is identified by detecting the outline or the location is tracked through the outline of each vehicle.

- Vehicle analysis: Based on the labeled vehicles, the speed, size, and direction of the vehicle can be analyzed to control traffic flow and identify the cause of traffic accidents.

8.3. Medical image processing

Outline detection and labeling are also frequently used in medical image analysis. For example, when analyzing CT scans or MRI images, the outline of a specific organ or lesion can be extracted, and the size or shape can be analyzed through this. Labeling allows you to accurately identify the location of lesions and analyze them individually.

Application Method

- Measuring tumor size: Detect the outline of a tumor in a medical image, analyze the size or shape of the tumor, and determine the progression of the disease.

- Organ analysis: Detect the outline of an organ to track changes in shape, or label the deformation of a specific part of the organ to perform additional analysis.

8.4. Security and surveillance system

Outline detection is used for object detection and tracking in security and surveillance systems. For example, you can detect people or objects in a surveillance camera, track their movements, and detect abnormal behavior. After outline detection, you can identify individual objects through labeling, and analyze each object.

Application Method

- Intruder detection: If a person intrudes in a security system, you can detect movement through outline detection and track the intruder.

- Tracking people or vehicles: Extract the outline of a vehicle or person from a surveillance camera, and track each object by distinguishing it through labeling. This allows you to monitor abnormal behavior in real time.

8.5. Object Recognition in Robotics

In robotics, computer vision technology is used to help robots recognize and manipulate objects. Outline detection plays an important role in helping robots recognize objects in their surroundings and selectively manipulate them. Labeling allows robots to distinguish and process multiple objects in their environment.

Applications

- Object Recognition and Selection: Used to accurately recognize objects by detecting the outline of an object, and to selectively pick up or move the object.

- Object Alignment and Placement: Labeling helps robots distinguish multiple objects and align or place them in designated locations.

8.6. Sports Game Analysis

Outline detection and labeling are used to track and analyze the movements of players in sports games. Player movements can be detected in real time, and data on each player can be labeled and analyzed. This technology can be used to develop strategies or measure performance during a game.

Applications

- Player Movement Analysis: Track the outline of each player to analyze their location and movement. This can be used to analyze the movement patterns of players in a game.

- Sports Strategy Analysis: By labeling and analyzing the movements of each player after the game, the team's strategy or playing style can be improved.

8.7. Object Recognition of Autonomous Vehicles

In autonomous vehicles, contour detection is an essential technology for recognizing objects around the road. Through labeling, the autonomous driving system can distinguish multiple objects on the road, track the movement of each object, avoid collisions, and plan the path.

Application Methods

- Lane and Obstacle Recognition: Recognize lanes and obstacles from their edges and contours, and determine the vehicle's driving path based on them.

- Object Tracking: Distinguish pedestrians or other vehicles through contour detection and labeling, and predict the movement of each object to drive safely.

9. Limitations and Improvements of Contour Detection and Labeling

Contour detection and labeling play a very important role in image processing, but they also have limitations and open challenges. Various approaches are being studied to address them, and better performance can be expected as the technology advances. Below, we describe the main limitations and some methods to overcome them.

9.1. Errors due to noise

If an image contains noise, many incorrect outlines may be detected during the outline detection process. If noise is recognized as an outline, unnecessary labeling may occur and cause confusion in object separation and analysis.

Solution

- Applying blurring and noise removal filters: Various filters such as Gaussian Blur and Median Filter can be used to remove noise before outline detection. This makes the outline detection results more accurate.

- Setting an appropriate threshold: Setting an appropriate threshold when performing binarization is also a way to reduce sensitivity to noise. Using an automatic threshold setting method such as Otsu’s Binarization can provide better results.

9.2. Difficulty in Processing Complex Backgrounds

In images with complex backgrounds, the boundary between the object and the background can be ambiguous. In such cases, edge detection becomes difficult and the object may not be accurately distinguished.

Solution

- Background Subtraction: Background subtraction techniques can be used to clarify the boundary between the object and the background. For example, if the difference image technique is used to separate the object and the background, the accuracy of edge detection improves.

- Histogram Equalization: If the contrast between the object and the background is increased by adjusting the brightness of the image, edge detection becomes easier.

9.3. Difficulty in Processing Dynamic Objects

Detecting the edge of a moving object in a video is a more complex task than in a fixed image. If the object moves or changes quickly, edge tracking and labeling may not be accurate.

Solution

- Frame Differencing: The edge of a moving object can be extracted by calculating the difference between consecutive frames. This enables accurate edge detection even in dynamic scenes.

- Powerful algorithms such as Canny edge detection: For fast and clear object tracking, it is helpful to use a powerful edge detection algorithm such as Canny edge detection. You can also apply filtering techniques to reduce motion blur.

9.4. Difficulty in distinguishing multiple objects

Distinguishing objects that are overlapping or very close to each other is another challenge in edge detection and labeling. If objects overlap, they may be recognized as one object, which makes it difficult to accurately label each object.

Solution

- Watershed algorithm: If objects overlap each other, the watershed algorithm can clearly separate the boundaries between the objects. This is an effective way to separate overlapping objects.

- Multi-scale Analysis: If the size of the objects is different, you can detect edges at multiple scales to distinguish between small and large objects.

9.5. Difficulty in accurately labeling

Even if edge detection is successful, there are cases where incorrect objects are labeled during the labeling process. In particular, if there are multiple similar objects, confusion may occur during the labeling process.

Solution

- Shape Analysis: After labeling, you can analyze the shape of each object to reduce confusion between similar objects. You can use cv2.approxPolyDP() to approximate the outline of the object with a polygon, and distinguish objects with similar shapes.

- Apply Clustering Technique: You can apply a clustering algorithm based on the size, shape, and location information of the object to distinguish different objects more accurately.

9.6. Limitations of Real-Time Processing

Processing edge detection and labeling in real time may be difficult depending on hardware performance. In particular, processing speed may be slow for large data or high-resolution images.

Solution

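- Use GPU acceleration: OpenCV provides CUDA-accelerated modules that can increase processing speed on a supported GPU. They are only available when OpenCV has been built with CUDA support, so check your installation first. On such a system, moving heavy steps such as filtering and thresholding to the GPU can improve real-time performance.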

- Multithreading: Multithreading can reduce the processing time by performing edge detection and labeling tasks in parallel.

9.7. Sensitivity to threshold setting

The performance of edge detection is highly dependent on the threshold set in the binarization stage. An incorrect threshold can cause too many or too few edge detections, which can also affect the labeling task.

Solution

- Adaptive Thresholding: When the lighting conditions of the image are uneven, an adaptive threshold can be used instead of a global threshold to detect the edge based on the local brightness of the image.

- Otsu's Method: When binarizing, Otsu's Method can automatically set the optimal threshold, making edge detection more accurate.

10. Conclusion

Contour detection and labeling are essential techniques that have proven their value in fields such as manufacturing defect detection, object recognition in traffic systems, and medical image processing. With OpenCV and Python, they can be implemented in a few intuitive lines of code and applied to objects in real time.

More accurate analysis becomes possible once you recognize the limitations caused by noise, complex backgrounds, and moving objects, and apply the solutions described above. An appropriate combination of techniques such as Gaussian blur, Otsu's method, and the watershed algorithm can greatly improve the performance of contour detection and labeling.

I hope this article helps you understand the various contour detection and labeling techniques and apply them to real projects or research, and that it serves as useful material for those who want to go deeper into image analysis with OpenCV and Python.

If you found this post useful, please like and subscribe below. ^^


★ All contents are referenced from the link below. ★

OpenCV: OpenCV-Python Tutorials


docs.opencv.org
